Back when I began taking dietetics courses, I kinda glossed over how dietary recommendations are set; they were just a bunch of acronyms to memorize. The older I've gotten and the more I've been involved in the literature, the more I see them as some of the most critical things for clinicians to understand, because they're often misinterpreted (in ways I have been equally guilty of). This has been happening a good bit in the recent vitamin D literature. I'll specifically be referencing a paper and a related letter (1,2) in the open-access journal Nutrients (from the same publishing group that allowed the Seneff glyphosate Gish-gallop paper to be a thing).

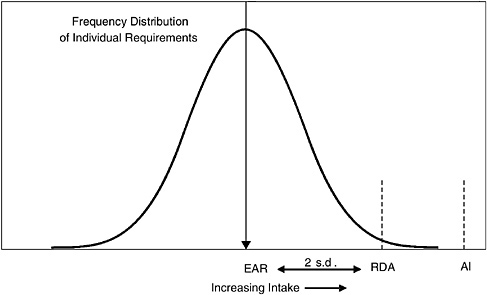

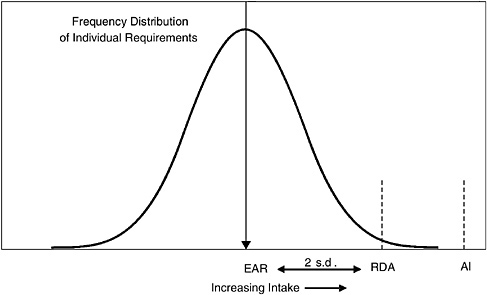

Both this publication and the letter make 2 critical assumptions:

- In their publication, Veugelers and Ekwaru state that the IOM made a critical statistical error, and they set out to show it: "To illustrate the difference between the former and latter interpretation, we estimated how much vitamin D is needed to achieve that 97.5% of individuals achieve serum 25(OH)D values of 50 nmol/L or more." For those unfamiliar, 50 nmol/L is equivalent to 20 ng/mL; both express the serum level linked to the RDA.

- In the corresponding letter, Heaney et al. analyze the GrassRootsHealth database and state that "The points at which these lines intersect the lower bound of the 95% probability band for serum 25(OH)D reflect the inputs necessary to ensure that 97.5% of the cohort would have a vitamin D status value at or above the respective 25(OH)D concentration." From their analysis, they determined that 3875 IU of vitamin D is needed to achieve at least 20 ng/mL (50 nmol/L) in 97.5% of the population, which is lower than the estimate of Veugelers and Ekwaru.
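To make the "lower bound of the band" logic concrete, here's a minimal Python sketch. Everything in the dose-response model (a straight line with normally distributed person-to-person scatter, and the `BASELINE`, `SLOPE`, and `RESID_SD` values) is a hypothetical stand-in I made up for illustration, not the curve either paper fitted; only the ng/mL to nmol/L factor (about 2.496, from the molar mass of 25(OH)D3) is a real constant. It solves for the intake at which the 2.5th percentile of serum 25(OH)D reaches 20 ng/mL, then reports where the median person would sit at that intake.

```python
from statistics import NormalDist

# Real constant: 1 ng/mL of 25(OH)D3 is about 2.496 nmol/L (molar mass ~400.6 g/mol).
NMOL_PER_NG_ML = 2.496

# HYPOTHETICAL dose-response model, NOT fitted to any real data:
# mean serum 25(OH)D (ng/mL) = BASELINE + SLOPE * (intake / 1000 IU),
# with normally distributed person-to-person scatter of RESID_SD.
BASELINE = 18.0   # hypothetical mean ng/mL with no supplemental intake
SLOPE = 4.0       # hypothetical ng/mL gained per 1000 IU/day
RESID_SD = 9.0    # hypothetical between-person SD, ng/mL


def ng_ml_to_nmol_l(ng_ml: float) -> float:
    """Convert a serum 25(OH)D concentration from ng/mL to nmol/L."""
    return ng_ml * NMOL_PER_NG_ML


def intake_for_coverage(threshold: float, coverage: float = 0.975) -> float:
    """Intake (IU/day) at which `coverage` of people sit at or above `threshold`,
    i.e. where the lower bound of the band crosses the threshold."""
    z = NormalDist().inv_cdf(coverage)  # about 1.96 for 97.5%
    # Solve BASELINE + SLOPE * x - z * RESID_SD = threshold for x (in 1000 IU).
    x = (threshold - BASELINE + z * RESID_SD) / SLOPE
    return max(x, 0.0) * 1000


def median_serum(intake_iu: float) -> float:
    """Mean (= median, under normality) serum level at a given intake."""
    return BASELINE + SLOPE * intake_iu / 1000


dose = intake_for_coverage(20.0)
print(f"20 ng/mL = {ng_ml_to_nmol_l(20.0):.1f} nmol/L")
print(f"Intake so 97.5% reach 20 ng/mL: {dose:.0f} IU/day")
print(f"Median serum 25(OH)D at that intake: {median_serum(dose):.1f} ng/mL")
```

With these made-up parameters the median person ends up well above 20 ng/mL, which is the point: pinning the bottom 2.5% of the achieved distribution to the RDA-linked level drags the middle of the distribution far above what most people need.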

What these analyses fail to take into account is what we all learned back in Nutrition 101. An RDA (3) is:

- Recommended Dietary Allowance (RDA): average daily level of intake sufficient to meet the nutrient requirements of nearly all (97%-98%) healthy people.
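For anyone who wants the arithmetic behind "97%-98%", here's a small Python sketch of the textbook construction: if individual requirements are roughly normal around the EAR, the RDA is set at EAR + 2 SD, and the share of people whose requirement falls at or below it is Φ(2), about 97.7%. When data on the variability of requirements are lacking, the DRI framework assumes a 10% CV (so RDA = 1.2 × EAR). The EAR of 100 below is an arbitrary placeholder; this illustrates the definition only, not how the vitamin D values were actually derived.

```python
from statistics import NormalDist


def rda_from_ear(ear: float, cv: float) -> float:
    """Textbook construction: RDA = EAR + 2 SD, with SD = cv * EAR."""
    return ear + 2 * cv * ear


def share_covered(intake: float, ear: float, cv: float) -> float:
    """Share of people whose requirement is at or below `intake`,
    assuming requirements are normally distributed around the EAR."""
    return NormalDist(mu=ear, sigma=cv * ear).cdf(intake)


EAR = 100.0  # arbitrary placeholder units
CV = 0.10    # default CV when requirement variability is unknown

rda = rda_from_ear(EAR, CV)
print(f"RDA = {rda:.0f} (1.2 x EAR)")
print(f"Covered at the RDA: {share_covered(rda, EAR, CV):.1%}")  # about 97.7%
print(f"Covered at the EAR: {share_covered(EAR, EAR, CV):.0%}")  # half, by definition
```

Flip the last line around and you get the post's point: at the RDA, nearly everyone's requirement is already met, and for the median person the RDA is two SDs more than they need.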

"To be clear, the goal is not, and should not be, to assure that 97.5% of the population exceeds the serum value linked to the RDA. Doing so would shift the distribution to a higher level that is associated with increased risk for adverse effects "

This is the critical error that Heaney and Veugelers make: they calculate the intake needed to get nearly everyone in the population up to the serum level linked to the RDA. But that serum level already exceeds the needs of about 97.5% of people, so by definition the resulting intake is far higher than most people need.

This tends to be a common mistake, especially from a clinician's perspective. If you look at how the DRIs are meant to be used (5), we tell people to plan a healthy diet aiming for the RDA. But when assessing the adequacy of an individual or a population from biochemical data (e.g., 25(OH)D), the guidance is to use the Estimated Average Requirement (EAR), together with other clinical markers, to judge the likelihood of inadequacy. It's critical for practitioners to understand that we can't just assume everyone in the population needs the RDA, because by definition it exceeds the requirement of about 97.5 percent of the population.
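Here's what "use the EAR, not the RDA" looks like when assessing a group, as a minimal Python sketch of the EAR cut-point approach: the share of a group with usual intakes below the EAR approximates the prevalence of inadequacy. The ten intake values are invented, and the cut-points assume the IOM's vitamin D EAR of 400 IU/day and RDA of 600 IU/day (ages 1-70). The method also leans on assumptions (roughly symmetric requirements, intakes independent of requirements, intake variance larger than requirement variance), so treat this as a sketch of the idea, not a validated assessment tool.

```python
from typing import Sequence

EAR_IU = 400  # IOM vitamin D EAR, IU/day (ages 1-70)
RDA_IU = 600  # IOM vitamin D RDA, IU/day (ages 1-70)

# HYPOTHETICAL usual intakes (IU/day) for a toy group of ten people.
group = [250, 350, 420, 480, 520, 560, 590, 640, 700, 900]


def share_below(usual_intakes: Sequence[float], cut_point: float) -> float:
    """Share of the group whose usual intake falls below `cut_point`."""
    if not usual_intakes:
        raise ValueError("need at least one intake value")
    return sum(1 for x in usual_intakes if x < cut_point) / len(usual_intakes)


# EAR cut-point method: share below the EAR approximates prevalence of inadequacy.
print(f"Below EAR (estimated inadequacy): {share_below(group, EAR_IU):.0%}")
# Misusing the RDA as the cut-off inflates the estimate.
print(f"Below RDA (overstated): {share_below(group, RDA_IU):.0%}")
```

With this toy group, the EAR cut-point flags 20% as likely inadequate, whereas using the RDA as the cut-off would flag 70%, which is exactly the inflation you get from treating the RDA as everyone's requirement.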

This mix-up is common in the literature when people claim that a higher blood level is better for "xyz" outcome. Take the Endocrine Society's clinical practice guideline for vitamin D (6) (notice Heaney is on this paper too): it provides recommendations intended to help all age groups attain levels of 30 ng/mL. That deviates from the way the IOM sets nutrition recommendations, yet the 30 ng/mL figure often gets compared to the IOM's 20 ng/mL. The two are not comparable, because the IOM does not recommend that everyone in the population hit 20 ng/mL; that value sits at the upper end of a distribution of requirements, and it is not a clinical target.

This issue isn't unique to vitamin D. It often happens with protein as well: virtually every study I've seen that argues for a higher protein intake frames that argument relative to the protein RDA (granted, they are often arguing for higher intakes for outcomes other than the RDA's outcome of nitrogen balance). Again, 0.8 g/kg body weight is more than most people in the population are suggested to need to stay in nitrogen balance. Note: I use "suggested" and rather conditional language throughout because, Darwin forbid, you ever read the DRIs and see the sample sizes they are based on...
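A quick way to see the protein point, as a Python sketch: assume adult protein requirements are normally distributed around the IOM EAR of 0.66 g/kg/day, with a CV of 12% (that CV is my assumption here; check the macronutrient DRI report before reusing it), and ask what share of people need less than 0.8 g/kg.

```python
from statistics import NormalDist

EAR_G_PER_KG = 0.66  # adult protein EAR (IOM), g/kg body weight/day
RDA_G_PER_KG = 0.80  # adult protein RDA (IOM), g/kg body weight/day
CV = 0.12            # ASSUMED coefficient of variation of individual requirements

# Requirement distribution under the normality and CV assumptions above.
requirements = NormalDist(mu=EAR_G_PER_KG, sigma=CV * EAR_G_PER_KG)
share_met_by_rda = requirements.cdf(RDA_G_PER_KG)

print(f"Share whose requirement is below 0.8 g/kg: {share_met_by_rda:.0%}")
print(f"Share needing more than 0.8 g/kg: {1 - share_met_by_rda:.1%}")
```

Under those assumptions roughly 96% of people's requirements sit below 0.8 g/kg, so "well above the RDA" is being measured against a number that is already generous for most people.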

I encourage anyone interested in the use of the DRIs to read the AND's practice paper on their application (7).

And before someone says it: yes, I know that the RDA is based only on bone-health outcomes, and that many want the levels to be higher to account for potential non-bone health outcomes. Once you acknowledge that the RDA is not a clinical value, and that we can't just say "use 30 instead of 20!", this becomes a much more difficult issue to address. Until causal links are established and we can determine a new EAR/RDA (if one is needed), I'm not on the several-thousand-IU-of-vitamin-D train. This is a perfect example of why so many have issues with 'evidence-based medicine', and it leads us into discussions about what level of evidence is required to make recommendations.

For those following the vitamin D debate, look for upcoming literature on:

1. RCTs to establish causal outcomes related to vitamin D

2. A greater understanding of how genetic variation contributes to vitamin D status; genetic epidemiological studies can be a great tool here

3. Vitamin D distribution, sequestration, and mobilization, particularly in obesity; a recent PLOS ONE study suggests that obesity is a major confounding factor in association studies between vitamin D and cardiovascular disease

4. The amount of vitamin D needed to raise blood levels after being deemed deficient (I've heard a number of anecdotes of individuals taking large amounts of vitamin D without seeing a change in blood levels, then seeing a massive increase if they keep taking that dose)

5. The role of other sterols formed from sun exposure but not from taking D3, e.g., tachysterol and lumisterol

6. Further investigations of the multiple studies showing a J-shaped curve with vitamin D and mortality outcomes

7. And, related to #6, vitamin D's interactions/co-associations with other nutrients, like retinol

8. An overall greater understanding of vitamin D's endocrine vs. paracrine effects, and the differences between vitamin D2 and D3 in promoting these.

9. One of the best ways, in my opinion, to decide whether to follow up on potential associations is Mendelian randomization (MR), which can help us infer causality from associations. One of the areas where MR suggests research dollars should be funneled is vitamin D and the regulation of blood pressure/hypertension (8).
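For anyone unfamiliar with how MR turns associations into causal estimates, here's a minimal Python sketch of the two basic summary-statistic estimators: the Wald ratio for a single genetic variant, and a fixed-effect inverse-variance weighted (IVW) combination across variants. The per-SNP effect sizes are invented placeholders, not values from (8), and the standard errors are first-order approximations that ignore uncertainty in the SNP-exposure effects. MR also rests on assumptions the code can't check: the variants must actually affect vitamin D status, be unrelated to confounders, and affect blood pressure only through vitamin D.

```python
from math import sqrt
from typing import Iterable, Tuple

# HYPOTHETICAL per-SNP summary statistics (NOT from any real study):
# (effect of SNP on 25(OH)D, effect of SNP on blood pressure, SE of the latter)
snps = [
    (0.10, -0.020, 0.008),
    (0.05, -0.012, 0.006),
    (0.08, -0.014, 0.007),
]


def wald_ratio(beta_exposure: float, beta_outcome: float,
               se_outcome: float) -> Tuple[float, float]:
    """Single-variant MR estimate with a first-order standard error."""
    return beta_outcome / beta_exposure, se_outcome / abs(beta_exposure)


def ivw_estimate(stats: Iterable[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Fixed-effect inverse-variance weighted combination of per-SNP Wald ratios."""
    weighted_sum = 0.0
    total_weight = 0.0
    for bx, by, se_y in stats:
        ratio, se_ratio = wald_ratio(bx, by, se_y)
        weight = 1 / se_ratio ** 2  # equals bx**2 / se_y**2
        weighted_sum += weight * ratio
        total_weight += weight
    return weighted_sum / total_weight, sqrt(1 / total_weight)


for bx, by, se_y in snps:
    est, se = wald_ratio(bx, by, se_y)
    print(f"Wald ratio: {est:.3f} (SE {se:.3f})")

causal_est, causal_se = ivw_estimate(snps)
print(f"IVW estimate: {causal_est:.3f} (SE {causal_se:.3f})")
```

Real MR analyses add sensitivity methods for pleiotropy on top of this, but the core idea is just this ratio: how much the outcome moves per unit the genetic variant moves the exposure.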

1. http://www.ncbi.nlm.nih.gov/pubmed/25333201

2. http://www.mdpi.com/2072-6643/7/3/1688/htm

3. http://ods.od.nih.gov/Health_Information/Dietary_Reference_Intakes.aspx

4. http://www.nap.edu/openbook.php?record_id=11767&page=129

5. http://www.ncbi.nlm.nih.gov/books/NBK45182/

6. http://press.endocrine.org/doi/pdf/10.1210/jc.2011-0385

7. http://www.andjrnl.org/article/S0002-8223(11)00285-9/abstract

8. http://www.thelancet.com/journals/landia/article/PIIS2213-8587(14)70113-5/abstract

Intriguing post, Kevin. Although you seem to look at this issue from an RDA perspective, I wouldn't label this as Nutrition 101. The issue with the RDA is much more complicated than the definitions you've cited. George Beaton has critiqued the use of RDAs for individual intakes (GH Beaton, Nutrition Reviews, 2006; 64(5) 211-225), and here is a link to that paper (though not in the best quality): http://ernaehrungsdenkwerkstatt.de/fileadmin/user_upload/EDWText/TextElemente/PHN-Texte/WHO_FAO_Report/Dietary_References_Beaton_Individual__NRe_2006_5_211.pdf . He stated that the variance of requirements for all nutrients except protein, iron, and vitamin A is based on poor scientific assumptions, e.g., the assumption that variability in the nutrient requirement distribution equals the 10% CV of basal metabolic rate (BMR), which dates back to the 1965 FAO/WHO report on protein requirements. I think it is worth questioning the scientific validity of the RDA and its applicability to the mean and variance of requirements for serum 25(OH)D in this case.

Hi Banaz,

Thanks for your comment. I'm very aware of the limitations of the RDAs and the controversies surrounding the vitamin D story. While we can debate how an RDA is set, that doesn't mean we should interpret RDAs as clinical values; that is the issue I was addressing in this post. My concern is when we stop acknowledging that there isn't one level that is optimal for all humans and start stating that every individual should hit some ideal level, as many are doing (Willett did this in the roundtable discussion held at Harvard with two of the committee members, and the Endocrine Society does this).
